This article first appeared in Data Economy, written by DataBank CEO, Raul K. Martynek.

It’s difficult to read any industry publication today and not see an article about “The Edge”. Most articles treat it as a foregone conclusion that there will be thousands or tens of thousands of edge data centers in the not-so-distant future. Most attribute the evolution of edge data centers to the introduction of 5G and concepts like Mobile Edge Computing. And almost all mention latency as the primary driver of edge data centers, citing the need to reduce latency to support a range of applications from autonomous vehicles to artificial intelligence to robotic surgery.

In this article, I would like to examine each of these assumptions and provide some observations regarding latency, the evolution of 5G and what DataBank is seeing in the marketplace today. I will argue that The Edge is already here today, but in an overlooked space: inside “traditional” data centers in secondary markets. I will also argue that micro edge data centers, for example those proposed at the base of cell towers, would provide negligible incremental latency benefits and would in fact introduce significant operational and technical hurdles to deploying infrastructure over large geographies.

Let’s begin by looking at 5G and how it will impact latency and the decision on where to deploy infrastructure. There is no doubt that the widespread adoption of mobile 5G will have important consequences for the evolution of the modern Internet. Broadly speaking, the two most important benefits of 5G are increased throughput and reduced latency. Everyone understands increased throughput. Verizon’s limited mobile 5G rollout in Chicago and Minneapolis has delivered download speeds of up to 600Mbps. More bandwidth is great, and I’m sure we’ll see new higher-definition video codecs and other technologies emerge to consume that bandwidth.

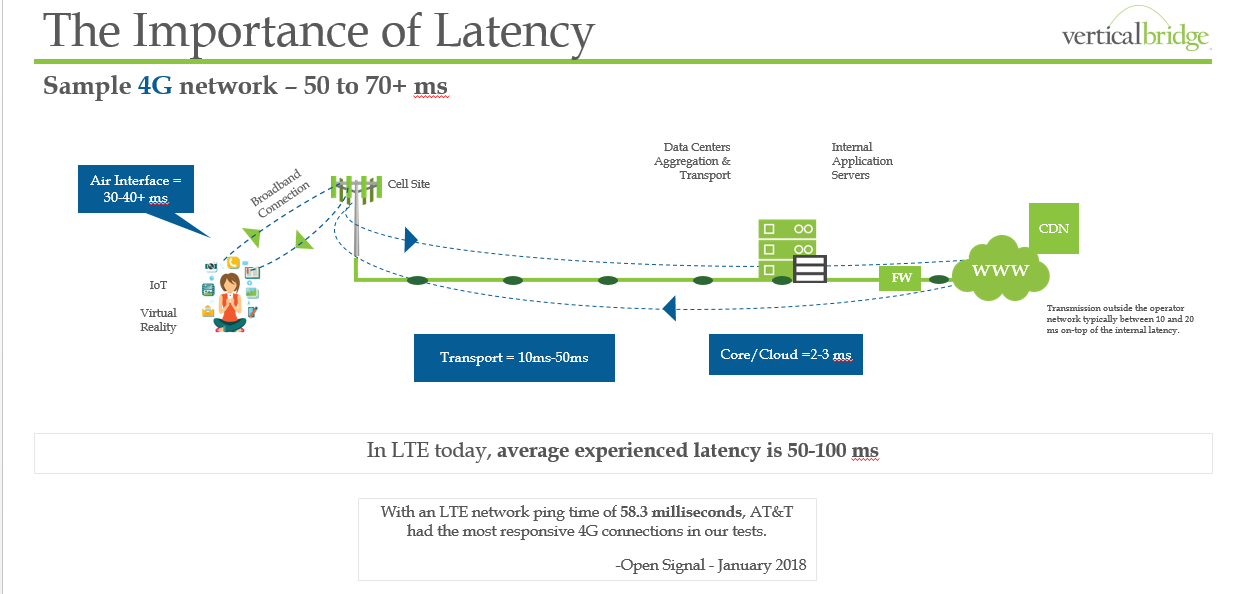

Improved latency is the other big benefit of 5G. What is less understood is exactly how 5G improves latency. The chart in Figure 1 illustrates how data is transferred by a smartphone and indicates the approximate latency introduced by the various segments of a mobile network. Note that the majority of the latency in 4G networks is created by the “air interface”, that is, the communication between your smartphone or other mobile device and the radio frequency antennas mounted on cellular towers. On average, over 50% of the latency on 4G networks is caused by the air interface. The latency in the transport and core network is much less significant by comparison.

Figure 1

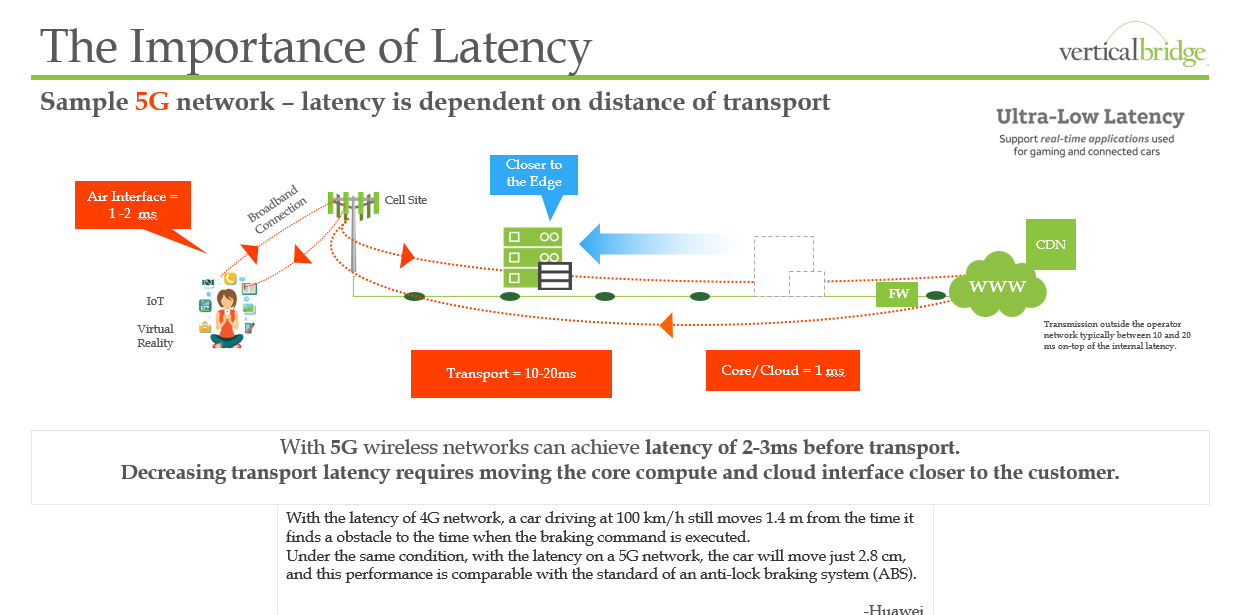

Figure 2 shows the same chart for 5G. As you can see, 5G dramatically reduces the latency of the air interface, dropping round trip times from an average of 50-60ms (or greater) to sub-10ms. The implications are clear: 5G, even without any change to where data centers are located, will dramatically accelerate application performance, even from distant “cloud data centers”. Everyone will get a latency benefit from 5G, since the problem with the air interface will be largely addressed and terrestrial networks will continue to move at the speed of light. By implication, applications that today (or tomorrow) could not function with the existing latency of 4G networks will perform adequately under 5G. Obviously, it is hard to predict which applications and in which instances, but it seems reasonable to assume that some group of applications will perform sufficiently well under 5G networks that no change in data center topology will be required to support them.

Now, 5G does include Multi-access Edge Computing (MEC), also known as Mobile Edge Computing, which enables the incorporation of cloud computing capabilities within a cellular network and will be leveraged for applications like IoT. For some set of applications, MEC will be useful. Our friends at Packet, who are supporting the Sprint Curiosity IoT network with their bare-metal cloud, are one such use case. But the latency benefits of 5G will accrue to every mobile internet user, regardless of where the application they are using is physically located.

The impact of 5G on future applications reminds me of the evolution of the early internet and the development of VoIP. In the bubble era (for those who remember), many people tried to introduce VoIP as an alternative to TDM voice. Nothing worked in the 1995-2000 era, as the internet was fundamentally not optimized for latency. As pipes got bigger and peering and caching evolved, VoIP over the open internet became possible. This is the opportunity that Vonage exploited when it came to market with an OTT voice application in the early 2000s, something that could not have been commercially viable a few years earlier. I believe the same thing will happen with the introduction of 5G.

Figure 2

But let’s dig a little deeper into the latency argument for edge data centers and examine the incremental benefit of deploying compute and storage infrastructure closer to end users in a given metro market.

Before we do, it’s helpful to recall how most large-scale internet applications are configured today from a data center footprint perspective. Recently, we bid on a 2MW requirement for an ad-tech company that was looking to establish a footprint in Dallas. Ad-tech is one of the most latency-sensitive internet use cases. For their business model to function, they need to be able to identify the characteristics of an internet user on one of their web properties, determine that user’s potential buying habits, conduct an auction among a group of ad buyers and insert the ad into the web page, all in under 100ms. Wow. The ad-tech firm had conducted significant network testing from various markets and determined that, to achieve a national sub-100ms response, they would need to deploy in three US locations: Ashburn, Dallas and Santa Clara. This East, Central and West configuration is how most web scale applications are deployed. This is precisely why the largest wholesale data center markets are in Ashburn, Dallas and Santa Clara.

So this is what works (in broadly the same form) for most of the existing internet application use cases today. The argument for micro edge data centers is that new applications will require near-real-time latency, and for that an East, Central and West configuration will not work. Instead, you will need to deploy at thousands of locations. Fair enough, so let’s examine the improvement in latency if we deploy infrastructure locally as opposed to solely in an East/Central/West configuration.

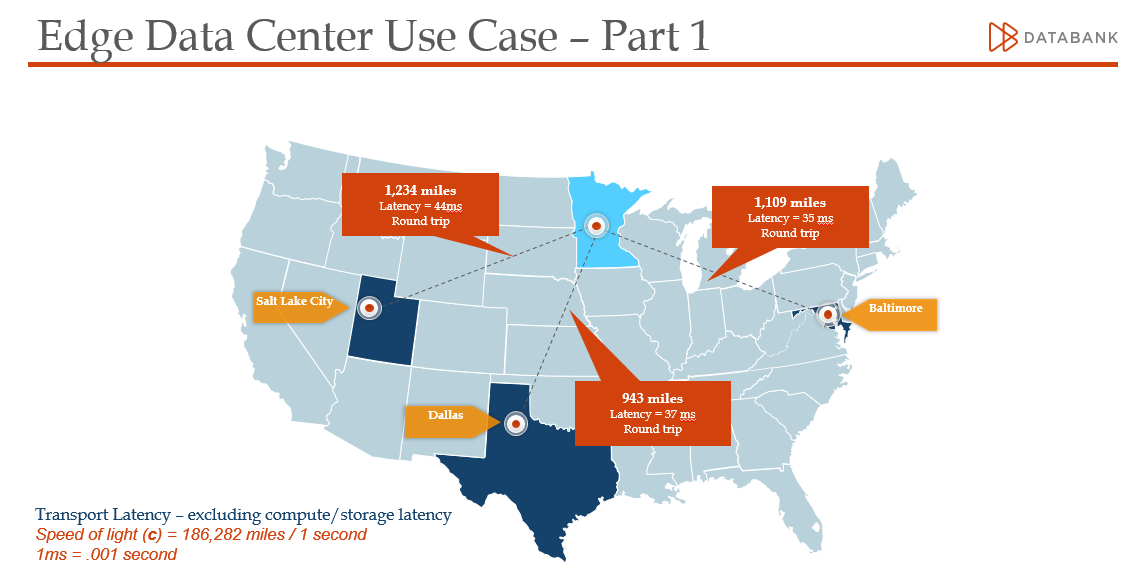

Figure 3 shows a map of the US and the expected latency from an East/Central/West configuration to Minneapolis, MN. For the purpose of this exercise we used the actual DataBank locations in SLC, DFW and BLT. I would imagine the latency to Santa Clara and Ashburn would add another 5-10ms. I picked Minneapolis because it’s geographically the most distant from the most common wholesale data center locations where web scale companies would likely deploy (excluding Chicago for the moment), so the benefit of reduced latency would be most pronounced. In addition, DataBank has two data centers in Minneapolis, so we have firsthand knowledge of the available data center options and IP network dynamics in the market.

Figure 3

As you can see, the latency to Minneapolis introduced by terrestrial fiber transport is less than 45ms over the open internet from East, Central and West locations. Actual latency on a private MPLS/backbone network would be about 5ms-10ms lower. Recall that with 5G, the latency of IP packets will be greatly reduced even without the introduction of micro edge data centers, since there will be significant improvement in the air interface. This means that with 5G, most cloud data centers will be 25ms-50ms away from end users, a significant improvement over 4G.

Nonetheless, let’s say you are a provider of an application that demands very low latency and you are looking to serve users in Minneapolis. What would you do? You would have two broad options: either deploy in a “traditional” data center within Minneapolis, for example the DataBank MSP1 or MSP2 data center, or split your workload and deploy in a larger number of smaller micro data centers within Minneapolis. Let’s look at the incremental benefit of deploying in 5 micro edge data centers compared to deploying in a single traditional data center.

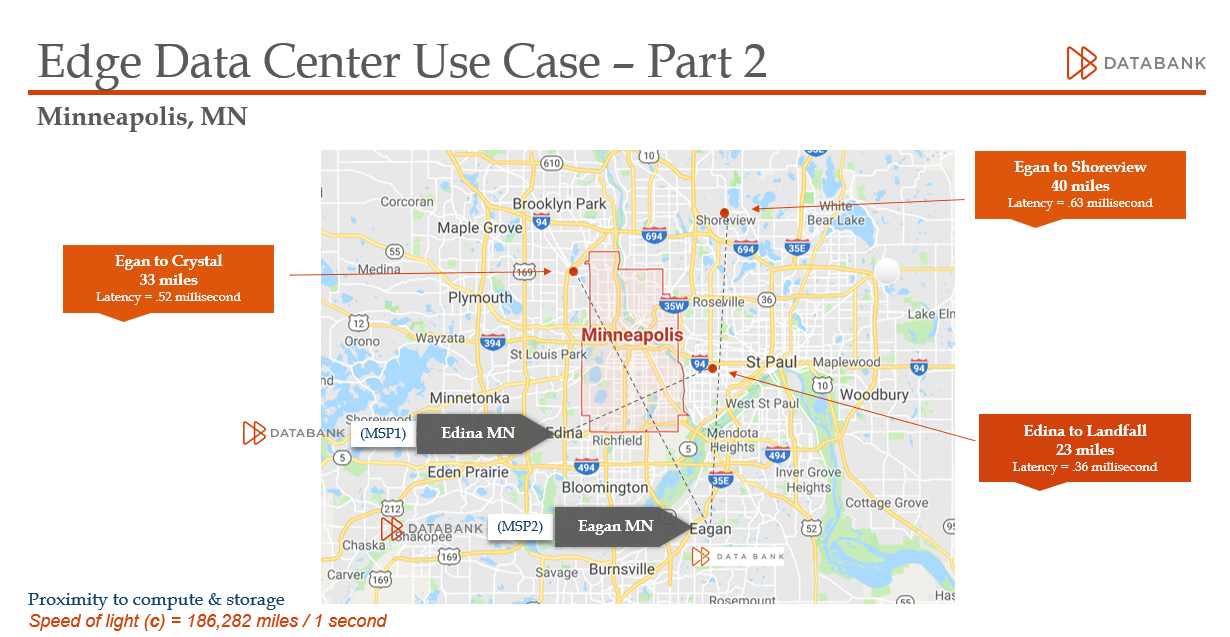

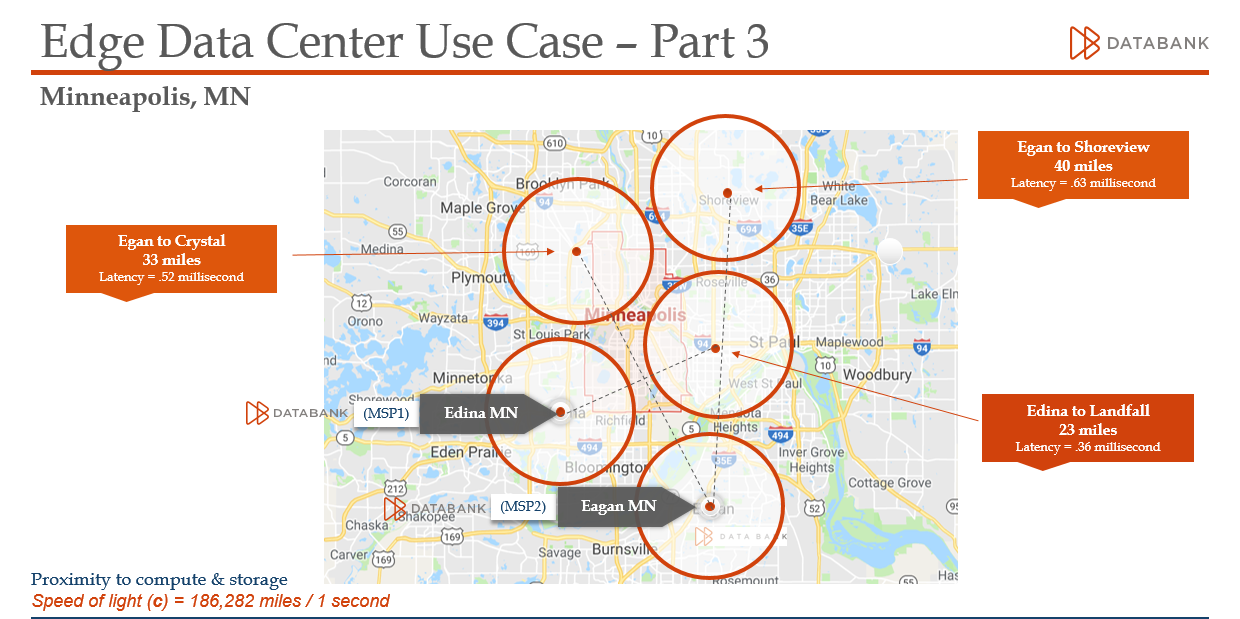

Figure 4 estimates what your expected latency would be to various locations within the Minneapolis market if you deployed in a traditional data center like MSP1 or MSP2. If networks were perfectly optimized, so that an end user’s packets could go directly to the data center “as the crow flies” instead of having to hairpin through a peering point or carrier hotel, the round trip latency would range from 0.16ms (for 10 miles) to 0.63ms. In reality, one would have to add the incremental latency to get to the carrier hotel or peering point in the market, but that will affect all users and all examples equally. Our ping tests indicate that from either MSP1 or MSP2, applications would be able to reach most destinations in the greater Minneapolis metro area within 3ms-5ms or less.
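The ideal "as the crow flies" figures above can be sanity-checked with a few lines of arithmetic. The sketch below is only an illustration: the article does not say what signal speed was assumed, so the value of roughly 200,000 km/s (about two thirds of the speed of light in a vacuum, a common rule of thumb for optical fiber) is an assumption, as are the function names. Under that assumption, 10 miles works out to about 0.16ms round trip, and a 0.63ms round trip corresponds to a straight-line distance of a little under 40 miles.

```python
# Assumption (not stated in the article): signals travel through optical
# fiber at about two thirds of the vacuum speed of light, ~200,000 km/s.
FIBER_SPEED_KM_PER_S = 200_000.0
KM_PER_MILE = 1.609344


def round_trip_ms(distance_miles: float) -> float:
    """Ideal straight-line round-trip propagation delay over fiber, in ms."""
    one_way_s = distance_miles * KM_PER_MILE / FIBER_SPEED_KM_PER_S
    return 2 * one_way_s * 1000.0


def miles_for_round_trip(rtt_ms: float) -> float:
    """Inverse of round_trip_ms: straight-line miles for a given round trip."""
    one_way_s = rtt_ms / 1000.0 / 2
    return one_way_s * FIBER_SPEED_KM_PER_S / KM_PER_MILE


if __name__ == "__main__":
    print(f"10 miles       -> {round_trip_ms(10):.2f} ms round trip")
    print(f"0.63 ms round trip -> {miles_for_round_trip(0.63):.0f} miles")
```

These numbers cover propagation only. They leave out routing, queuing and the hairpin through a carrier hotel or peering point, which is why the measured 3ms-5ms is well above the ideal figure.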

Figure 4

Figure 5 shows what would happen if you chose to deploy in 5 micro data centers, with MSP1 and MSP2 as two of the locations. In this scenario, your theoretical end user would almost always be within a 10 mile radius of a micro data center and be able to achieve ~0.16ms round trip latency (again, assuming networks are perfectly optimal, which they are not). But going from one data center location to 5 micro data center locations improves your round trip latency by less than 1-2ms. Compared to an East/Central/West configuration, the vast majority of the latency benefit comes from simply choosing to deploy 1 node within a metro market. Deploying in tens, hundreds or thousands of micro data centers would only improve latency by 1ms or less, and in some cases could introduce latency, depending on where the peering occurs.
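To put the two steps side by side, here is a rough back-of-the-envelope comparison using only the round trip figures quoted in this article. It is a sketch rather than a measurement: it takes the upper bounds (45ms regional, 5ms measured in-metro) and uses the ideal propagation figures (0.63ms and 0.16ms) as a stand-in for the best case gain from micro sites, on the article's premise that the peering or carrier hotel detour affects both in-metro options equally. The constant and function names are made up for illustration.

```python
from typing import Tuple

# Round-trip figures in milliseconds, taken from the article's text.
REGIONAL_MS = 45.0        # upper bound, East/Central/West to Minneapolis
METRO_MEASURED_MS = 5.0   # upper end of the 3-5 ms ping tests from MSP1/MSP2
METRO_IDEAL_MS = 0.63     # ideal propagation, upper end of the in-metro range
MICRO_IDEAL_MS = 0.16     # ideal propagation within a 10-mile radius


def latency_gains() -> Tuple[float, float, float]:
    """Return (metro_gain_ms, micro_gain_ms, metro_share_of_total_gain)."""
    # Gain from moving out of a regional hub into one in-metro data center.
    metro_gain = REGIONAL_MS - METRO_MEASURED_MS
    # Best-case extra gain from splitting that site into micro sites.
    micro_gain = METRO_IDEAL_MS - MICRO_IDEAL_MS
    share = metro_gain / (metro_gain + micro_gain)
    return metro_gain, micro_gain, share


if __name__ == "__main__":
    metro_gain, micro_gain, share = latency_gains()
    print(f"regional -> single metro site: {metro_gain:.1f} ms saved")
    print(f"single site -> micro sites:    {micro_gain:.2f} ms saved")
    print(f"share of total gain from the first metro site: {share:.1%}")
```

On these inputs, the first in-metro site saves roughly 40ms and the additional micro sites save under half a millisecond, so nearly all of the improvement comes from the first step.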

Figure 5

When you consider the complexity, cost and operational support needs of deploying infrastructure in the field, coupled with the benefits of scale that come with aggregating infrastructure in a single location, the single data center deployment to serve a metropolitan market is superior. The conclusion is that once an application is deployed in a single location in a given market, the actual latency to reach any eyeball in that market is dramatically reduced compared to the common East/Central/West configuration of web scale data centers, and the incremental benefits of micro data centers evaporate.

We do business with many of the top 20 largest cloud and content players, and we do see those customers leveraging our traditional data center geographies in secondary markets to expand their capabilities. It seems logical to us that before the large cloud and content players deploy at 10,000 cell tower locations, they will first deploy a single cluster in a traditional data center in the top metro markets that they are looking to serve, and be able to reach any eyeball in those geographies with very low latency. In large geographic markets, for example the LA metro, the provider can easily choose 2-3 traditional data centers already in the market.

Spend enough time in the telecom and technology industries and it becomes clear that the hype around many new technologies usually precedes the reality by 5-10 years. We believe that is the case with micro edge data centers. While it’s possible that at some point in the future new applications we can only dream of today will emerge and require an even more distributed data center geography, our view is that the smart money will focus on this “medium edge” of second-tier markets, where cloud and content deployments are happening today.
