By Eric Swartz, vice president of engineering, and Jenny Gerson, senior director of sustainability – DataBank

With great power comes great responsibility. The growing demand for compute power brings greater demand for cooling, along with the need to deliver that cooling sustainably. This blog addresses current and potential cooling options, and we’d like to know your thoughts, too. In fact, we’re planning an event on the topic and will use your feedback to build one that’s relevant to your business. Please share your thoughts at feedback@databank.com.

Demand is escalating rapidly for data centers to provide cooling solutions that keep pace with the rapid adoption of artificial intelligence, high-performance computing, and graphics-intensive applications. At the same time, low-latency needs are driving operators to stack compute resources in the smallest footprint possible, both to maximize compute output and to reduce the cost of expensive cabling.

These workloads require high-density server racks that go beyond the capacity of air cooling. As a result, enterprises and colocation data center providers are looking toward liquid cooling options. In the past, liquid cooling was rarely an absolute necessity and was generally viewed negatively in multi-tenant environments. That limited development and resulted in few options and high set-up costs.

However, systems have become so power-dense that air alone can no longer keep up, leaving liquid cooling as the only option. Fortunately, today’s technologies make it possible to efficiently deploy piping and fittings that contain liquids and deliver cooling closer to CPUs, GPUs, and memory chips.

3 Ways to Leverage Liquid Cooling

- One common cooling approach is liquid-to-the-rack, which uses cooling coils built into the rear doors of rack cabinets and connected to the data center’s chilled water loop. This advances the technique of running chilled water through a Computer Room Air Handler (CRAH) on the perimeter and blowing cold air into a data hall. The chilled liquid goes directly to the back of the cabinet doors, so the hot air is cooled as it comes out the back of the cabinet instead of having to travel all the way to a CRAH.

- For additional cooling capacity, some enterprises are turning to servers with direct-to-chip cooling. Inside the servers, tubes filled with liquid connect to cold plates sitting on top of the chips.

Instead of a fan blowing air over a heat sink to disperse heat, liquid runs through micro-channels within the cold plates to extract heat from the chips. The liquid stays sealed inside the tubes and plates and never touches the server components directly. Servers with these designs attach the cold plates to high heat loads like CPUs, GPUs, and memory chips. Components generating low heat, such as capacitors, are still cooled by air.

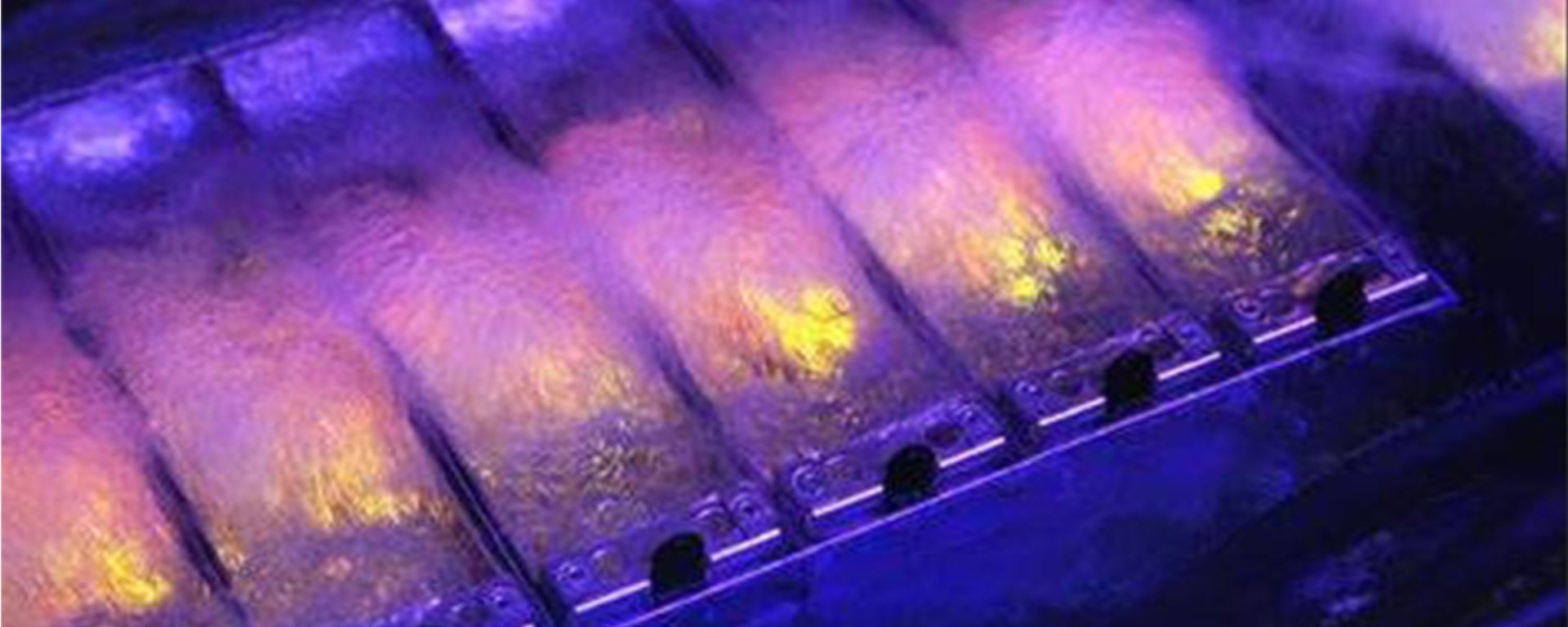

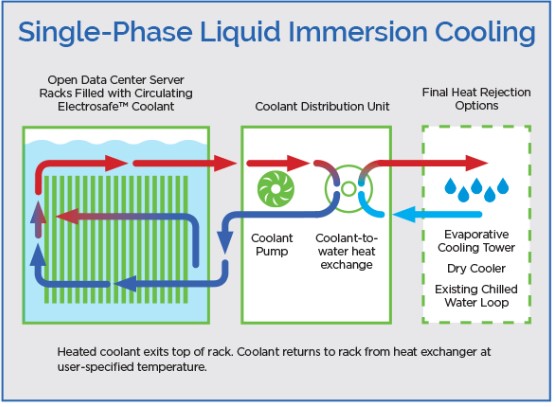

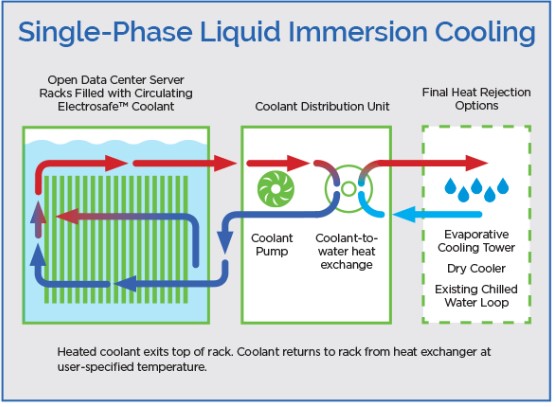

- The most advanced technique is immersion cooling, in which the servers sit in a tub of thermally conductive dielectric liquid, typically a mineral oil substance.

All the heat created by the server components is captured by direct contact with the dielectric liquid, which can absorb significantly more heat than the same volume of air. Through convection, the warm liquid rises in the tub and flows over the top into a vat. From there it is pumped through a heat exchanger to be cooled and then returned to the bottom of the tub.
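To put a rough number on "more heat for the same volume," the sketch below compares how much heat one cubic meter of air and one cubic meter of mineral oil absorb per degree of temperature rise, using Q = ρ · c_p · V · ΔT. The property values are approximate textbook figures assumed for illustration; they do not come from this article or from any specific dielectric fluid, and the function names are hypothetical.

```python
# Approximate room-temperature properties. These are assumed textbook-style
# values for illustration only, not measurements of any particular product.
AIR_DENSITY = 1.2          # kg/m^3
AIR_SPECIFIC_HEAT = 1005   # J/(kg*K)
OIL_DENSITY = 850          # kg/m^3, typical mineral oil (assumption)
OIL_SPECIFIC_HEAT = 1670   # J/(kg*K), typical mineral oil (assumption)


def heat_absorbed_joules(density, specific_heat, volume_m3, delta_t_k):
    """Heat absorbed by a fluid volume for a temperature rise: Q = rho * c_p * V * dT."""
    return density * specific_heat * volume_m3 * delta_t_k


def oil_to_air_ratio():
    """Ratio of heat absorbed by 1 m^3 of oil vs. 1 m^3 of air for a 1 K rise."""
    air = heat_absorbed_joules(AIR_DENSITY, AIR_SPECIFIC_HEAT, 1.0, 1.0)
    oil = heat_absorbed_joules(OIL_DENSITY, OIL_SPECIFIC_HEAT, 1.0, 1.0)
    return oil / air


if __name__ == "__main__":
    print(f"Oil absorbs roughly {oil_to_air_ratio():.0f}x the heat of air per unit volume")
```

With these assumed values the ratio comes out on the order of a thousand, which is why a relatively slow-moving bath of liquid can carry away heat that would otherwise require very large volumes of fast-moving air.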

All three cooling approaches can use a closed-loop heat rejection system to reduce water consumption and operational costs. Once a loop has been filled, the liquid would need to be replaced only if there’s a leak or during maintenance. During maintenance, the systems might lose just a few gallons of liquid, but the rest can be continuously recycled.

A Comparison of Kilowatt Capacities

In today’s colocation facilities, when an application workload needs a high-density rack that requires liquid cooling, the chosen technique is usually whichever one the data center provider can bring online most quickly. Looking ahead, colocation providers will pre-design data hall cooling systems based on the density levels they want to offer to their customers.

As an example of the densities that the various cooling techniques can handle, a standard air-cooled rack maxes out at around 40 kilowatts. Racks with chilled-liquid doors can push to 60 kilowatts or even more, depending on the conditions. Direct-to-chip and immersion cooling take racks even further, to 100 kilowatts and beyond.
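As a rough illustration of how these density bands might feed a planning decision, here is a minimal sketch that maps a rack's power draw to a cooling category. The thresholds are the approximate figures quoted above, not engineering limits, and the function and constant names are hypothetical.

```python
# Approximate per-rack ceilings taken from the figures quoted in the text.
# Real limits depend on site conditions; treat these as illustrative only.
AIR_MAX_KW = 40
REAR_DOOR_MAX_KW = 60


def suggest_cooling(rack_kw: float) -> str:
    """Return a rough cooling category for a given rack power density in kW."""
    if rack_kw <= 0:
        raise ValueError("rack_kw must be positive")
    if rack_kw <= AIR_MAX_KW:
        return "air"
    if rack_kw <= REAR_DOOR_MAX_KW:
        return "rear-door liquid"
    return "direct-to-chip or immersion"


if __name__ == "__main__":
    for kw in (15, 40, 55, 100):
        print(f"{kw} kW -> {suggest_cooling(kw)}")
```

In practice the boundaries overlap, since rear-door systems can exceed 60 kilowatts under the right conditions, so a real selection process would weigh site-specific factors rather than fixed cutoffs.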

A Positive Impact on the Environment

A key sustainability benefit for purpose-built data centers offering immersion cooling is the reduced environmental impact. Because the dielectric liquid is in direct contact with every component of the server and captures heat better than air, the liquid can run at significantly higher temperatures than the air in air-cooled systems.

Consider that chips in high-density servers can run at upwards of 150° Fahrenheit. Because the liquid in which the servers are immersed is so much more efficient than air at absorbing heat, it can comfortably run at temperatures of over 110° Fahrenheit, compared to the 70° to 80° Fahrenheit at which air conditioners must run to cool servers. Because the liquid is hotter than the outside air in most climates, heat can be rejected outdoors using nothing more than pumps and fans.

This enables some immersion systems to be deployed in a way that eliminates the need for refrigerant-based mechanical cooling.
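The reasoning here comes down to a temperature comparison: heat flows to the outside air only when the coolant is warmer than that air by some margin. The sketch below converts the Fahrenheit figures above to Celsius and checks that condition. The 10° Fahrenheit approach margin is an assumption chosen for illustration, not a figure from this article, and the function names are hypothetical.

```python
def f_to_c(temp_f: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (temp_f - 32) * 5 / 9


def can_reject_to_outside_air(coolant_f: float, ambient_f: float,
                              approach_f: float = 10.0) -> bool:
    """True if the coolant is at least `approach_f` degrees warmer than ambient.

    `approach_f` is an assumed margin between coolant and outdoor air
    temperature; real heat exchangers have their own design values.
    """
    return ambient_f + approach_f <= coolant_f


if __name__ == "__main__":
    print(f"110 F is about {f_to_c(110):.1f} C")
    # Immersion liquid at 110 F on a 95 F day: pumps and fans can suffice.
    print(can_reject_to_outside_air(110, 95))
    # A 75 F air-cooling setpoint on the same day: mechanical cooling needed.
    print(can_reject_to_outside_air(75, 95))
```

Under this simple model, a coolant running at 110° Fahrenheit can shed heat on a 95° Fahrenheit day, while a 75° Fahrenheit air setpoint cannot, which is the gap that refrigerant-based equipment normally has to close.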

Pioneer Breakthroughs Rely on Combination of Air and Liquid

Some data centers are considering innovative uses of the heat that their water and liquid cooling systems absorb from server racks. It’s possible, for example, for a data center to pump heat to nearby buildings to keep them warm during the winter, as we have done for the neighboring office space at our ATL1 facility.

Air Is Still Here to Stay

Air cooling is not expected to go away completely. Given the highly specialized requirements for deployments like direct-to-chip and immersion cooling, not everybody will be able to make the switch to these cooling techniques. Also, most data centers will always have low-density servers, as well as secondary components in high-density racks, that can be cooled with air.

To provide sufficient cooling for all densities of server racks (while also conserving energy), data centers will ultimately need to deploy a combination of liquid and air-based systems. As rack density increases, the key will be to move the cooling mechanism closer to the server or the chips in order to sufficiently remove the heat.

To learn more, watch for an upcoming DataBank event on data center cooling techniques. In the meantime, we would like to hear from you about your experiences with air, water, and liquid cooling: Is one technique working better for you? Do you rely on a combination of cooling techniques? What have you done to control costs?

Remember to share your thoughts and questions about cooling your own IT environments by emailing us today at feedback@databank.com.
