By Rob Lerner, Vice President of Sales and Cloud Strategies, DataBank

Rob Lerner, Vice President of Sales and Cloud Strategies at DataBank, stresses the concept of application portability and shows how it can help companies generate a win-win-win in terms of cost, performance, and geography.

As companies think about designing and hosting applications, workloads, and other assets, many already understand the benefits of options such as hybrid hosting models or environments designed to support agile development methods. Yet we now think the industry should focus on a new paradigm above all else: design for portability.

As the name suggests, portable application design gives companies the ability to move applications and data from one type of compute environment (cloud, hybrid cloud, on-premises, or colocation) to another with minimal disruption. This architecture strategy delivers maximum flexibility and best positions companies to shift services quickly as business needs change.

Consider the case of a company developing a brand-new application, such as a SaaS solution. In the beginning, it makes sense for this company to host the application in the cloud, especially if the company doesn’t have the resources or budget to support on-premises hosting and management. However, as the application becomes more popular, it begins to collect and store much more data, which can lead to egress and other transaction fees high enough to come as a shock. Worse, cloud-native products and code are not easy to port to another cloud provider’s technology, which contributes to vendor lock-in and a loss of technology flexibility.

Fine-Tune the Metrics that Matter Most

Portable design overcomes these issues and helps companies strike a balance among three critical measurement “dials”: cost, performance, and geography. For example, if companies are looking to reduce costs, they can migrate data-intensive workloads to a lower-cost provider or alternative hosting model. If they want to increase performance, they can move a performance-demanding workload to a best-fit cloud provider in real time. If they want to host applications in a particular region, whether to improve network latency or to reach consumers at the edge, they can use cloud partners in the ideal location or locations.

Best of all, portable application design enables companies to optimize the results across the three dials. It doesn’t force IT leaders to rank priorities, but instead, provides the complete flexibility to help them achieve the balance that is right for their business on an ongoing basis.
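One way to picture the three dials is as weights in a simple scoring function. The sketch below is only an illustration: the `HostingOption` type, the scoring formula, the weights, and every cost and latency figure are assumptions made up for this example, not data from any provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostingOption:
    name: str
    monthly_cost: float     # dollars per month (made-up figure)
    latency_ms: float       # typical latency to target users (made-up figure)
    in_target_region: bool  # whether the option has a site in the desired region


def dial_score(option, options, weights):
    """Return a weighted score in [0, 1]; higher is better."""
    max_cost = max(o.monthly_cost for o in options)
    max_latency = max(o.latency_ms for o in options)
    cost = 1.0 - option.monthly_cost / max_cost          # cheaper scores higher
    performance = 1.0 - option.latency_ms / max_latency  # faster scores higher
    geography = 1.0 if option.in_target_region else 0.0
    total_weight = sum(weights.values())
    return (
        weights["cost"] * cost
        + weights["performance"] * performance
        + weights["geography"] * geography
    ) / total_weight


def best_option(options, weights):
    """Pick the option with the highest weighted score."""
    return max(options, key=lambda o: dial_score(o, options, weights))


# Hypothetical candidates for a single workload.
OPTIONS = [
    HostingOption("public-cloud", 10_000.0, 20.0, True),
    HostingOption("private-cloud", 6_000.0, 35.0, True),
    HostingOption("colocation", 4_000.0, 50.0, False),
]

cost_first = best_option(
    OPTIONS, {"cost": 0.8, "performance": 0.1, "geography": 0.1}
)
speed_first = best_option(
    OPTIONS, {"cost": 0.1, "performance": 0.7, "geography": 0.2}
)
print(cost_first.name, speed_first.name)
```

Turning a dial here just means changing a weight. The same workload lands in a different environment depending on what the business values most, which is only practical if the workload can actually move.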

It is important that companies start thinking about portable application design as early as possible, though. If they rely too much on tools that are native to one specific cloud provider, they inadvertently paint themselves into a corner, because it may simply be too expensive or difficult to redesign for a portable architecture later. (We’ll describe this in more detail below.)

Portable Applications at a Glance

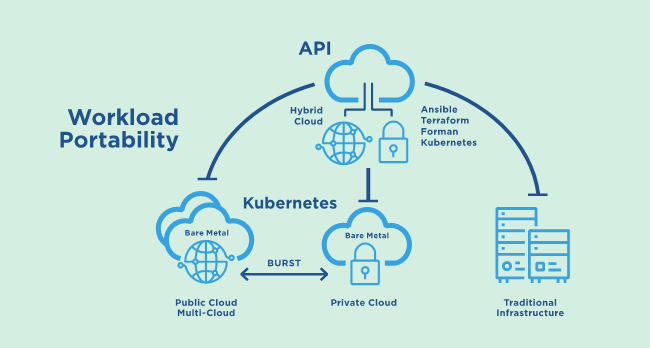

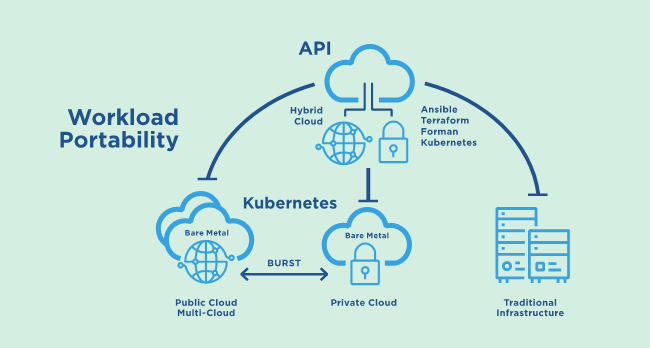

The diagram in this section depicts a portable workload infrastructure, which balances auto-scaling with the cost savings of better-utilized resources by sharing workloads across the infrastructure.

Within this architecture, if your business experiences periodic spikes in activity, bursting workloads to the public cloud during those times offers the best economics. When the workload is relatively consistent, a private cloud or a colocation data center will, most likely, reduce the long-term costs.
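The trade-off between bursting and steady hosting can be framed as a break-even calculation. The following is a minimal sketch under simplifying assumptions: on-demand capacity is billed only for the hours it runs, fixed capacity is billed for every hour, and the two hourly rates are hypothetical numbers chosen for illustration.

```python
def breakeven_utilization(on_demand_hourly: float, fixed_hourly: float) -> float:
    """Fraction of hours in use above which always-on capacity becomes cheaper."""
    if on_demand_hourly <= 0:
        raise ValueError("on_demand_hourly must be positive")
    return fixed_hourly / on_demand_hourly


def cheaper_option(utilization: float, on_demand_hourly: float, fixed_hourly: float) -> str:
    """Compare the average hourly cost of one unit of capacity under both models."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    on_demand_cost = utilization * on_demand_hourly  # pay only for hours in use
    if on_demand_cost < fixed_hourly:                # fixed capacity is paid every hour
        return "public cloud"
    return "private cloud or colocation"


ON_DEMAND = 1.00  # hypothetical dollars per unit-hour in a public cloud
FIXED = 0.40      # hypothetical dollars per unit-hour for always-on capacity

print(breakeven_utilization(ON_DEMAND, FIXED))
print(cheaper_option(0.20, ON_DEMAND, FIXED))  # spiky workload
print(cheaper_option(0.90, ON_DEMAND, FIXED))  # steady workload
```

Real pricing adds reserved-instance discounts, egress fees, and staffing costs, so the break-even point in practice will differ from this simplified ratio.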

Native Cloud Technologies Reduce Portability

To demonstrate the importance of portability, consider the case of an IoT company that deploys thousands of thermostats, motion sensors, cameras, and microphones for customers who manage multi-building properties such as hotels, casinos, and college campuses. Knowing that services might need to scale quickly during busy periods for customers—such as a big event at a hotel or casino or the beginning of a college semester—the IoT company decided to develop its platform to manage and monitor the devices using technologies that were native to its hyperscale public cloud platform.

The platform-native approach seemed to make sense at first. After all, it was easy to deploy applications quickly in the cloud infrastructure. One year later, however, the hyperscale environment was needed only 20% of the time. During the remaining 80%, the IoT company was essentially paying an elasticity tax on compute resources that sat dormant during periods of low workload. In addition, the cost of hosting the environment increased rapidly as the infrastructure began to accumulate large data sets.

This is a case in which, once the initial workload stabilized, it would have made sense to move the base infrastructure into a virtual private cloud, where compute costs are lower. The IoT company could also have set up on-ramp interconnects to a hyperscale cloud platform to expand compute capacity cost-efficiently when workload spikes occur. However, this approach depends on building the initial environment with container orchestration tools, such as Kubernetes, and provisioning tools, such as Ansible and Terraform, which are open source and can function in any cloud, on-premises, or colocation data center.
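The base-plus-burst arrangement described above can be sketched in a few lines. This is an illustration only: the demand profile, the capacity figure, and both hourly rates are invented for the example, and the spike occupies 2 of 10 hours simply to mirror the 20% figure in the scenario.

```python
def split_demand(hourly_demand, base_capacity):
    """Split each hour's demand into private-cloud units and public-cloud burst units."""
    base = [min(d, base_capacity) for d in hourly_demand]
    burst = [max(d - base_capacity, 0) for d in hourly_demand]
    return base, burst


def hybrid_cost(hourly_demand, base_capacity, private_rate, public_rate):
    """Base capacity is paid for every hour; burst units are paid only when used."""
    _, burst = split_demand(hourly_demand, base_capacity)
    fixed = base_capacity * private_rate * len(hourly_demand)
    variable = sum(burst) * public_rate
    return fixed + variable


def all_public_cost(hourly_demand, public_rate):
    """Every unit of demand is served at the public-cloud rate."""
    return sum(hourly_demand) * public_rate


# Hypothetical demand in compute units per hour, with a spike in 2 of 10 hours.
DEMAND = [10, 10, 10, 10, 50, 50, 10, 10, 10, 10]
PUBLIC_RATE = 1.0   # hypothetical dollars per unit-hour
PRIVATE_RATE = 0.5  # hypothetical dollars per unit-hour

print(all_public_cost(DEMAND, PUBLIC_RATE))
print(hybrid_cost(DEMAND, base_capacity=10, private_rate=PRIVATE_RATE, public_rate=PUBLIC_RATE))
```

With these made-up rates the hybrid layout is cheaper because the steady base load no longer pays the public-cloud premium. The calculation assumes the workload can run unchanged in both environments, which is the portability requirement this article argues for.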

In this case, the IoT company relied on technologies that were native to the cloud platform provider. Porting such an environment to another cloud provider or to a colocation or on-premises data center would be costly. If the company had avoided the native approach, it would have had the flexibility to port its applications easily to any cloud environment and to use a hybrid approach that lets it migrate workloads according to user demand.

Upfront Sacrifice Generates Long-Term Savings

The cloud is a great place to give birth to an application. It’s the fastest way to make the application available to end-users, and if the workload demand explodes, you can easily scale compute resources to ensure positive user experiences.

However, as the application workload begins to mature by stabilizing and accumulating large data sets and transactions, the costs of the cloud can quickly escalate. That’s when it often makes sense to move a portion of the infrastructure to a colocation data center or private cloud environment.

The key is to design for that portability now. As you evaluate IT infrastructure partners, ensure they offer the following, either now or on their product roadmap:

- Industry-standard APIs using open source technologies for mobility among environments in the cloud, on-premises, and in colocation data centers

- Containerization, so that workloads are packaged with their dependencies and share the host operating system kernel instead of each carrying a full guest operating system

- Cloud on-ramps to facilitate your application’s portability

- Security to protect your data as it moves to and from the cloud

By avoiding the temptation to develop applications with technologies that are native to a particular cloud platform, you might sacrifice some ease of deployment upfront, but you can generate long-term cost savings for your company while giving your business the flexibility it needs, now and in the future.

About the Author: Rob Lerner is a 22-year industry veteran who has held leadership positions in solutions engineering and sales, always holding a customer-first mentality.